California has enacted new youth-focused digital safety laws covering AI chatbots, app ecosystems, and social media platforms, which may affect product design, user experience, and data practices across the mobile ecosystem. MEF CEO Dario Betti explores what this could mean for operators, developers, and platform partners navigating the evolving regulatory landscape.

California has signed into law three measures targeting youth interactions with AI chatbots, app ecosystems, and social media.

Governor Newsom signed the measures in October 2025, with implementation staged across 2026–2027. Given California’s market size and the technical complexity of maintaining state-specific builds, the changes are likely to influence product design and compliance practices nationally.

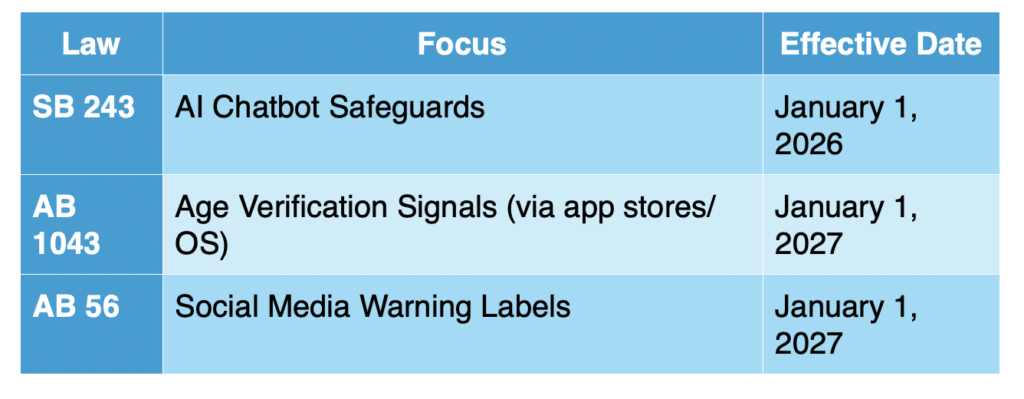

Overview of the laws

SB 243 (AI Chatbot Safeguards) takes effect January 1, 2026. It applies to AI chatbots that provide adaptive, human-like responses capable of meeting users’ social needs. The law excludes traditional customer service chatbots, certain in-game chatbots (provided they stay on game-related topics and cannot discuss mental health, self-harm, or sexual content), and standalone devices such as voice-activated virtual assistants.

Key requirements include clear disclosure that the chatbot is not a person; protocols to address suicidal ideation and self-harm content; specific protections for users under 18 (e.g., disclosures, periodic reminders, and prohibitions on sexually explicit content or suggestions); and documentation and reporting of safety metrics, including crisis referrals. Remedies include injunctive relief, damages equal to the greater of actual damages or $1,000 per violation, and attorneys’ fees.
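To make the SB 243 disclosure and reminder duties concrete, here is a minimal Python sketch of how a companion-chat service might track them per session. The three-hour reminder interval, the notice wording, and the `ChatSession` class are assumptions for illustration, not statutory text or any vendor's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Assumption: reminder cadence for users known to be minors. The summary above
# says only "periodic reminders"; check the statute text before relying on this.
REMINDER_INTERVAL = timedelta(hours=3)

# Hypothetical notice wording, not statutory text.
DISCLOSURE = "You are chatting with an AI. This chatbot is not a person."
BREAK_REMINDER = "Reminder: this chatbot is AI-generated, not human. Consider taking a break."


@dataclass
class ChatSession:
    user_is_minor: bool
    started_at: datetime
    disclosed: bool = False
    last_reminder_at: Optional[datetime] = None

    def notices_due(self, now: datetime) -> List[str]:
        """Return the notices to show before the next bot reply, updating session state."""
        notices: List[str] = []
        # Every session opens with a not-a-person disclosure.
        if not self.disclosed:
            notices.append(DISCLOSURE)
            self.disclosed = True
        # Minors also get a reminder once the interval has elapsed since the
        # session start or the previous reminder.
        if self.user_is_minor:
            anchor = self.last_reminder_at or self.started_at
            if now - anchor >= REMINDER_INTERVAL:
                notices.append(BREAK_REMINDER)
                self.last_reminder_at = now
        return notices
```

A service would call `notices_due` before each reply and render any returned notices ahead of the bot's message.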


AB 1043 (Age Verification Signals) takes effect January 1, 2027. App stores and operating systems must support account-level age indication in four bands (≤12, 13–15, 16–17, 18+). Developers will receive an age “signal” and are deemed to have actual knowledge of a user’s age range upon receipt. The law restricts sharing of age data to legal-compliance purposes. Enforcement lies with the Attorney General, with civil penalties of up to $2,500 per affected child for negligent violations and up to $7,500 per affected child for intentional violations.
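The four AB 1043 bands and the penalty ceilings above can be expressed as a small lookup. This is a hypothetical sketch: the `AgeBand` names and string values are invented here (the law does not prescribe an API format), and the exposure function assumes both penalty figures are assessed per affected child.

```python
from enum import Enum


class AgeBand(Enum):
    """The four account-level bands described for AB 1043 (hypothetical names and values)."""
    UNDER_13 = "<=12"
    AGE_13_15 = "13-15"
    AGE_16_17 = "16-17"
    ADULT = "18+"


def band_for_age(age_years: int) -> AgeBand:
    """Map a whole-year age to its band."""
    if age_years < 0:
        raise ValueError("age must be non-negative")
    if age_years <= 12:
        return AgeBand.UNDER_13
    if age_years <= 15:
        return AgeBand.AGE_13_15
    if age_years <= 17:
        return AgeBand.AGE_16_17
    return AgeBand.ADULT


def is_minor(band: AgeBand) -> bool:
    return band is not AgeBand.ADULT


# Penalty ceilings as summarized above, assumed to apply per affected child in both cases.
NEGLIGENT_MAX_PER_CHILD = 2_500
INTENTIONAL_MAX_PER_CHILD = 7_500


def max_penalty_exposure(affected_children: int, intentional: bool) -> int:
    """Upper bound on civil penalties: affected children times the per-child ceiling."""
    rate = INTENTIONAL_MAX_PER_CHILD if intentional else NEGLIGENT_MAX_PER_CHILD
    return affected_children * rate
```

For example, a negligent violation affecting 1,000 children carries a ceiling of 1,000 × $2,500 = $2.5 million, rising to $7.5 million if intentional.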

AB 56 (Social Media Warning Labels) also takes effect January 1, 2027. For users under 18 on covered social media platforms, warning labels must display:

- Initial warning: at login/first access for at least 10 seconds (unless closed), covering at least 25% of the screen.

- Periodic warning: after 3 hours of cumulative use and at least every hour thereafter, displayed for at least 30 seconds, covering at least 75% of the screen.

- Required wording: “The Surgeon General has warned that while social media may have benefits for some young users, social media is associated with significant mental health harms and has not been proven safe for young users.”

Exclusions include services whose primary function is e-commerce, cloud storage, email, certain direct messaging, internal communications, and private collaboration.
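The AB 56 display rules above translate into a scheduling check plus a coverage check. In this sketch, `warning_due` and `meets_coverage` are hypothetical helpers; the counting window for cumulative use (for example, per day) is an assumption the summary above does not settle, and the caller is expected to reset `periodic_shown` when that window rolls over.

```python
from dataclasses import dataclass
from typing import Optional

# Thresholds as summarized above for AB 56.
INITIAL_MIN_SECONDS = 10        # shown at least this long unless the user closes it
INITIAL_MIN_COVERAGE = 0.25
PERIODIC_START_MINUTES = 180    # after 3 hours of cumulative use
PERIODIC_INTERVAL_MINUTES = 60  # at least every hour thereafter
PERIODIC_MIN_SECONDS = 30
PERIODIC_MIN_COVERAGE = 0.75


@dataclass(frozen=True)
class WarningSpec:
    kind: str
    min_seconds: int
    min_coverage: float


def warning_due(cumulative_minutes: float, shown_initial: bool,
                periodic_shown: int) -> Optional[WarningSpec]:
    """Decide which warning, if any, is due for an under-18 user.

    periodic_shown counts periodic warnings already displayed in the
    current counting window.
    """
    if not shown_initial:
        return WarningSpec("initial", INITIAL_MIN_SECONDS, INITIAL_MIN_COVERAGE)
    next_due = PERIODIC_START_MINUTES + periodic_shown * PERIODIC_INTERVAL_MINUTES
    if cumulative_minutes >= next_due:
        return WarningSpec("periodic", PERIODIC_MIN_SECONDS, PERIODIC_MIN_COVERAGE)
    return None


def meets_coverage(overlay_w: int, overlay_h: int, screen_w: int, screen_h: int,
                   required: float) -> bool:
    """Check an overlay's share of the screen area against a threshold."""
    return overlay_w * overlay_h >= required * screen_w * screen_h
```

Coverage is measured here as overlay area over screen area; how to treat notches, safe areas, or split-screen modes is not addressed in this sketch.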

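Key Dates

- October 2025: Governor Newsom signs SB 243, AB 1043, and AB 56.

- January 1, 2026: SB 243 (AI Chatbot Safeguards) takes effect.

- January 1, 2027: AB 1043 (Age Verification Signals) and AB 56 (Social Media Warning Labels) take effect.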

Implications for the mobile ecosystem and MEF members

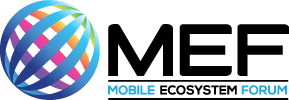

Mobile network operators (MNOs), mobile service providers, app developers, AdTech firms, messaging providers, and platform partners face impacts that span identity, UX, safety systems, and data governance. Because AB 1043 relies on platform-level age signaling and AB 56 requires timed warnings, much of the implementation work will involve OS vendors, app stores, and large social platforms—yet downstream developers and service providers will need to adapt to new signals and constraints.

Platform age signaling and developer workflows (AB 1043)

- App stores/OS: Must design, operate, and secure account-level age categories and expose them via APIs/SDKs. This includes cross-device/session consistency, parental account flows, and privacy/security controls.

- Developers (MEF member apps): Treat received age bands as “actual knowledge.” Expect to implement age-aware UX, content gating, feature throttling, default privacy settings, and stricter data minimization for minors. Contracts with vendors and SDKs will likely need amendments to restrict onward sharing or secondary uses of age data.

- Data governance: Age signals become high-sensitivity data. MEF members in analytics, attribution, and monetization should prepare to segment processing paths or disable certain features for under-18 cohorts to comply with non-sharing and purpose-limitation requirements.
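On the developer side, one way to act on a received age band is a single policy table that sets account defaults. The signal strings, the `Defaults` fields, and the choices in `POLICY` are hypothetical product decisions, not requirements spelled out in AB 1043.

```python
from dataclasses import dataclass
from enum import Enum


class AgeBand(Enum):
    # Hypothetical wire values for the four bands.
    UNDER_13 = "<=12"
    AGE_13_15 = "13-15"
    AGE_16_17 = "16-17"
    ADULT = "18+"


@dataclass(frozen=True)
class Defaults:
    personalized_ads: bool
    public_profile: bool
    analytics_level: str  # "full", "minimal", or "off" (hypothetical tiers)


# Hypothetical policy table: product choices, not statutory requirements.
POLICY = {
    AgeBand.UNDER_13: Defaults(personalized_ads=False, public_profile=False, analytics_level="off"),
    AgeBand.AGE_13_15: Defaults(personalized_ads=False, public_profile=False, analytics_level="minimal"),
    AgeBand.AGE_16_17: Defaults(personalized_ads=False, public_profile=False, analytics_level="minimal"),
    AgeBand.ADULT: Defaults(personalized_ads=True, public_profile=True, analytics_level="full"),
}


def defaults_for_signal(raw_signal: str) -> Defaults:
    """Map a received age-band signal to account defaults.

    An unrecognized value falls back to the most protective defaults
    rather than to adult behaviour.
    """
    try:
        band = AgeBand(raw_signal)
    except ValueError:
        band = AgeBand.UNDER_13
    return POLICY[band]
```

Falling back to the most protective defaults for an unrecognized value is a conservative design choice, not a legal requirement.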

Social media UX and time-tracking requirements (AB 56)

- Time tracking: Platforms must measure cumulative use for under-18 users across sessions and, ideally, devices. This may necessitate persistent identifiers tied to age, with careful handling to avoid expanding tracking surfaces beyond compliance needs.

- Warning experience: Platforms will need to build interruptive, accessible warnings that meet the duration and screen-coverage thresholds. Localization, accessibility compliance, and performance on lower-end devices will matter for mobile UX.

- Age detection interplay: Effective operation depends on reliable identification of under-18 users. Expect alignment with AB 1043 age signaling to reduce false positives and negatives. Logged-out users and guest modes may require separate logic.
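Measuring cumulative use across sessions and devices is essentially an interval-union problem. The sketch below assumes each session arrives as a (start, end) pair keyed to the same account and that simultaneous use on two devices should count once; the summary above does not say how overlapping sessions are treated.

```python
from datetime import datetime, timedelta
from typing import Iterable, Tuple

Interval = Tuple[datetime, datetime]


def cumulative_use(sessions: Iterable[Interval]) -> timedelta:
    """Total time covered by the union of session intervals.

    Overlapping sessions (e.g. phone and tablet open at once) are merged so
    the same minutes are not counted twice.
    """
    total = timedelta()
    cur_start = cur_end = None
    for start, end in sorted(sessions):
        if end <= start:
            continue  # ignore empty or malformed sessions
        if cur_end is None or start > cur_end:
            # Gap before this session: close out the current merged interval.
            if cur_end is not None:
                total += cur_end - cur_start
            cur_start, cur_end = start, end
        else:
            # Overlapping or adjacent: extend the current merged interval.
            cur_end = max(cur_end, end)
    if cur_end is not None:
        total += cur_end - cur_start
    return total
```

The result can feed a warning scheduler directly, for example as `cumulative_use(...).total_seconds() / 60`.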

AI chatbot classification and safety (SB 243)

- Coverage decisions: MEF members deploying conversational AI need to assess whether their bots qualify as “meeting social needs.” Where coverage applies, implement bot disclosure, self-harm/suicide escalation protocols, and under-18 protections (including strict sexual content filters).

- Safety stack: On-device and server-side classifiers for content harm detection; human-in-the-loop escalation; integration with crisis services; and audit-ready logging/reporting of interventions and referrals.

- Product boundaries: For excluded categories (e.g., customer support bots), ensure technical and UX separation from socially oriented chat features to avoid reclassification.
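A minimal routing step for the SB 243 safety stack might look like the following. The classifier scores are inputs from whatever models a team deploys, and the thresholds, message text, and `SafetyLog` structure are placeholders; 988 is the US Suicide & Crisis Lifeline number, but which crisis services to refer users to is a product and legal decision.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List

# Hypothetical thresholds on 0.0-1.0 scores from whatever harm classifiers are deployed.
SELF_HARM_THRESHOLD = 0.8
SEXUAL_CONTENT_THRESHOLD = 0.5

CRISIS_REFERRAL = (
    "It sounds like you may be going through a lot. In the US you can call or "
    "text 988 to reach the Suicide & Crisis Lifeline."
)
BLOCKED_REPLY = "Sorry, I can't help with that."


@dataclass
class SafetyLog:
    """Counts interventions for reporting and queues conversations for human review."""
    counts: Counter = field(default_factory=Counter)
    review_queue: List[str] = field(default_factory=list)

    def record(self, event: str, conversation_id: str, needs_review: bool = False) -> None:
        self.counts[event] += 1
        if needs_review:
            self.review_queue.append(conversation_id)


def route_reply(conversation_id: str, draft_reply: str, user_self_harm_score: float,
                reply_sexual_score: float, user_is_minor: bool, log: SafetyLog) -> str:
    """Apply crisis escalation and minor protections before a reply is sent."""
    # Self-harm signals in the user's message override the drafted reply for everyone.
    if user_self_harm_score >= SELF_HARM_THRESHOLD:
        log.record("crisis_referral", conversation_id, needs_review=True)
        return CRISIS_REFERRAL
    # For minors, block drafted replies the classifier flags as sexual content.
    if user_is_minor and reply_sexual_score >= SEXUAL_CONTENT_THRESHOLD:
        log.record("minor_sexual_content_blocked", conversation_id)
        return BLOCKED_REPLY
    return draft_reply
```

The `counts` in `SafetyLog` are the kind of aggregate a team could export for crisis-referral reporting, while `review_queue` stands in for a human-in-the-loop escalation path.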

Advertising and monetization

- Targeting constraints: With actual knowledge of age ranges, app developers and AdTech partners may need to reduce behavioural targeting, profiling, or certain personalized recommendations for minors, especially younger bands.

- Measurement/attribution: Some SDKs may require age-aware modes that limit data collection or modelling for under-18 users, impacting campaign optimization and ROI reporting.
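An age-aware ad request could strip personal fields before anything leaves the app. This is a sketch only: the field names below are hypothetical and do not correspond to a specific SDK or bid-request schema.

```python
from typing import Any, Dict

# Hypothetical personal-data fields; real SDKs and bid formats differ.
PERSONAL_FIELDS = ("device_id", "precise_location", "interest_segments")


def build_ad_request(base: Dict[str, Any], is_minor: bool) -> Dict[str, Any]:
    """Return a copy of an ad request, stripping personal fields for minors."""
    request = dict(base)  # never mutate the caller's dict
    if is_minor:
        for key in PERSONAL_FIELDS:
            request.pop(key, None)
        # Hypothetical flags telling downstream partners to serve contextual ads only.
        request["personalized"] = False
        request["contextual_only"] = True
    return request
```

Doing the stripping in one function at the app boundary keeps the under-18 path auditable, rather than relying on each downstream partner to honour a flag.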

Identity, consent, and parental involvement

- Parental controls: While not mandated explicitly in these statutes, age-band infrastructure will likely intersect with parental consent/controls for under-13s and teen safety features. MEF members offering family or youth products should anticipate expanded guardian workflows.

- Cross-jurisdictional consistency: Many mobile products will choose to standardize experiences nationally to reduce fragmentation, which could propagate California’s requirements beyond state borders.

Compliance operations and evidence

- Documentation: SB 243’s reporting expectations and AB 56’s UX timing/coverage thresholds will push teams to maintain telemetry, QA artifacts, and audit trails demonstrating compliance.

- Vendor oversight: Contracts, DPAs, and SDK agreements may need revisions to prohibit sharing of age data for purposes other than legal compliance and to ensure downstream adherence (especially for measurement, notifications, and content delivery partners).
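The vendor-oversight and documentation points pair naturally: every attempt to release age data is logged, and only an allowed purpose goes through. `ALLOWED_PURPOSES`, the `legal_compliance` label, and `share_age_band` are illustrative names, assuming a single internal chokepoint through which all age-data sharing passes.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List

# Hypothetical purpose label; the law limits sharing to legal-compliance purposes.
ALLOWED_PURPOSES = frozenset({"legal_compliance"})


class SharingNotPermitted(Exception):
    pass


@dataclass
class AuditTrail:
    entries: List[dict] = field(default_factory=list)

    def append(self, **entry) -> None:
        # Timestamp every entry so the trail can serve as compliance evidence.
        entry["at"] = datetime.now(timezone.utc).isoformat()
        self.entries.append(entry)


def share_age_band(band: str, recipient: str, purpose: str, audit: AuditTrail) -> dict:
    """Release an age band to a vendor only for an allowed purpose, logging every attempt."""
    allowed = purpose in ALLOWED_PURPOSES
    audit.append(action="share_age_band", recipient=recipient, purpose=purpose, allowed=allowed)
    if not allowed:
        raise SharingNotPermitted(f"age data may not be shared for purpose {purpose!r}")
    return {"recipient": recipient, "age_band": band}
```

Logging refused attempts as well as permitted ones gives auditors evidence that the restriction is actually enforced.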

Timelines and sequencing

- 2025: Design/architecture and vendor selection; begin classifier, escalation, and API workstreams.

- 2026: SB 243 becomes effective; AI chatbot safety and reporting should be live. Pilot AB 1043 age signaling integrations.

- 2027: AB 1043 and AB 56 go live; social media warning UX and full age-aware operations should be production-ready.
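One way to follow this sequencing is to gate compliance features on effective dates, so code can ship early behind flags and switch on automatically. A minimal sketch using the dates summarized in this article; the lookup and function name are hypothetical.

```python
from datetime import date
from typing import List

# Effective dates as summarized in this article.
EFFECTIVE_DATES = {
    "SB 243": date(2026, 1, 1),
    "AB 1043": date(2027, 1, 1),
    "AB 56": date(2027, 1, 1),
}


def laws_in_effect(on: date) -> List[str]:
    """Return the laws in force on a given date, sorted by name."""
    return sorted(law for law, start in EFFECTIVE_DATES.items() if on >= start)
```

For instance, `laws_in_effect(date(2026, 6, 1))` returns `["SB 243"]`, so only the chatbot safeguards would be switched on at that point.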