Digital platforms are growing rapidly in scale and influence, prompting governments around the world to introduce stricter regulations aimed at enhancing user safety, platform accountability, and data transparency. From the European Union’s Digital Services Act to the United Kingdom’s Online Safety Act and emerging frameworks across Latin America, Asia, and Africa, the global regulatory landscape is evolving fast — and in this context, MEF CEO Dario Betti takes a closer look at how these developments impact the mobile and digital ecosystem.

As someone who has spent years watching the digital world evolve, I am struck by how quickly the conversation around social media has shifted from innovation and connection to questions of responsibility and risk.

In the United States, we are now seeing a wave of lawsuits—brought by state governments, school districts, and individuals—challenging the very foundations of how social media companies operate. By 2026, these cases will be front and centre, and their outcomes could reshape our industry.

At the heart of these lawsuits is a deeply human concern: are social media platforms doing enough to protect young people from harm? Plaintiffs argue that flaws in algorithms and platform features are causing psychological and physical damage. But these cases are about more than individual stories—they are testing the boundaries of technology regulation, consumer protection, and whether social media companies can be held liable under the same rules as traditional product manufacturers.

In the USA, courts are wrestling with a fundamental question: are these platforms products, services, or something entirely new? Section 230, the law that has long shielded platforms from liability for user content, is being tested as never before—especially when it comes to algorithms. Meanwhile, state legislatures are pushing for more regulation, and the industry is pushing back. Globally, we see a similar trend toward regulation and risk management, with the EU and UK leading the way with proactive frameworks.

As the boundaries of social media blur and extend into messaging and conversational commerce, MEF members need to be aware of the shift in regulatory sentiment. The message is clear: stay informed, design responsibly, and be ready for a regulatory environment that is changing fast, not just in the USA but around the world. The stakes are high, and the decisions made in courtrooms today will shape the digital ecosystem for years to come.

What’s at Stake? Platforms, Products, or Services

The central legal question in the USA is deceptively simple: should social media platforms be treated as “products” or “services”? This is not just a technicality. If platforms are seen as products, they could face much broader liability for design defects, just as a car or toy manufacturer would if its product caused harm.

The debate is far from settled. In 2023, a California superior court ruled against treating social media as products, but more recently a federal judge in Northern California suggested that some features might indeed be “product components.” This back-and-forth is not just legal theory: it has real implications for how companies design, operate, and manage risk.

Product: Adolescent Social Media Addiction MDL.

Right now, nearly 1,900 cases are pending in the Adolescent Social Media Addiction Multi-District Litigation (MDL) in California. Judge Yvonne Gonzalez Rogers has selected 11 lawsuits for bellwether trials, involving both school districts and individual families. These trials, starting in 2026, will help define how US courts view the legal status of social media.

Judge Rogers has already ruled that certain alleged design defects—like weak age verification, ineffective parental controls, and manipulative filters—could be considered “products” or “product components.” But she also clarified that only claims about the design or function of these features, not about how companies respond to user reports, can proceed under product liability law (see Bogard v. TikTok, Feb. 25, 2025).

Services: The Contrasting View from the California Superior Court

In contrast, a California superior court in 2023 ruled that social media sites are not “products” for liability purposes. The court pointed out that platforms are intangible, interactive, and experienced differently by each user—unlike traditional products, which are uniform and tangible. As a result, the court dismissed product liability claims but allowed negligence claims to proceed.

Platforms: Section 230 and the Limits of Liability

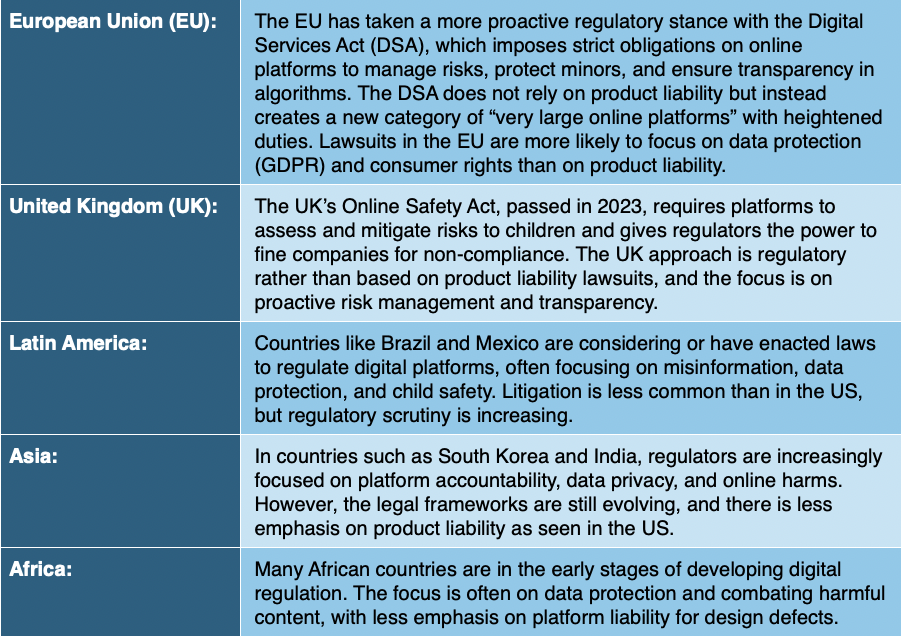

Another major legal battleground in the US is Section 230 of the Communications Decency Act (CDA), which generally shields online platforms from liability for user-generated content. US courts are split on whether algorithmic recommendations are protected by Section 230. The outcome will shape how platforms design their algorithms and how much risk they face from user harms. A few cases are testing the limits of this protection by focusing on platform design rather than content moderation:

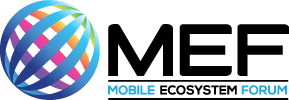

Global Perspectives: How Do Other Regions Compare?

While the US is at the forefront of these high-profile lawsuits, similar debates are happening worldwide, though with important differences in legal frameworks and regulatory approaches. Globally, the trend is toward greater regulation of social media platforms, especially to protect children and vulnerable users. However, the US stands out for its use of product liability lawsuits, while other regions rely more on regulatory frameworks and administrative enforcement.

Implications for the Mobile Ecosystem

For MEF members and others in the digital ecosystem, these legal battles have several important implications:

- Product Design and Risk Management: Companies must carefully assess whether features could be seen as “products” or “product components” and design with safety and compliance in mind, especially in the US.

- Algorithm Transparency: The legal uncertainty around algorithmic recommendations means companies should document how their algorithms work and consider safeguards, especially for young users.

- Compliance with Local Laws: Companies operating globally must monitor legislative developments in each region and be ready to adapt to different regulatory requirements.

- Section 230 Uncertainty (US): The scope of Section 230 immunity is being tested. Companies should not assume blanket protection, especially for design-related claims.

For more information or to join the Insight Group, please contact the MEF team at info@mobileecosystemforum.com.
