Meta’s latest Community Standards Enforcement Report offers a long view of how content moderation has evolved since 2017. While high-profile issues like hate speech and nudity often capture attention, the real scale of enforcement tells a different story. MEF CEO Dario Betti explores the numbers, trends, and what they reveal about the challenges of governing global platforms.

The Meta Community Standards Enforcement Report for Q2 2025 (covering the quarter ended June 30, 2025) was published in early September 2025. It offers a valuable view of trends in social media, as it covers all of Meta's apps.

It also allows a review across multiple years: Meta began publishing this report in late 2017 (some categories were only added in the 2020s). By now, the numbers tell a consistent story: the bulk of moderation work is not in high-profile categories like hate speech or violent content, but in the relentless task of tackling spam and fake accounts.

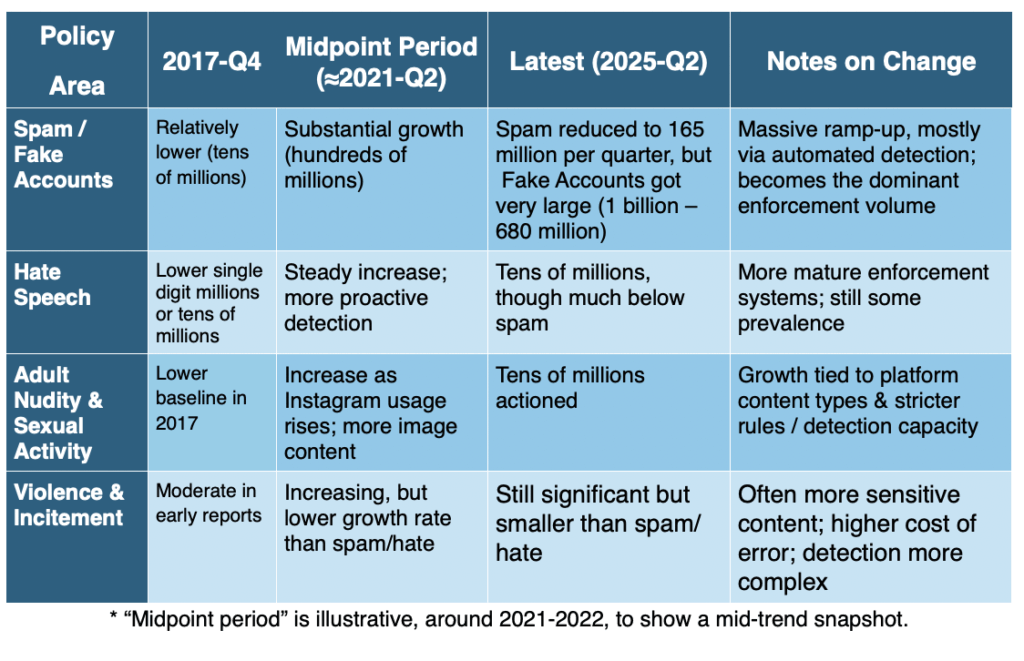

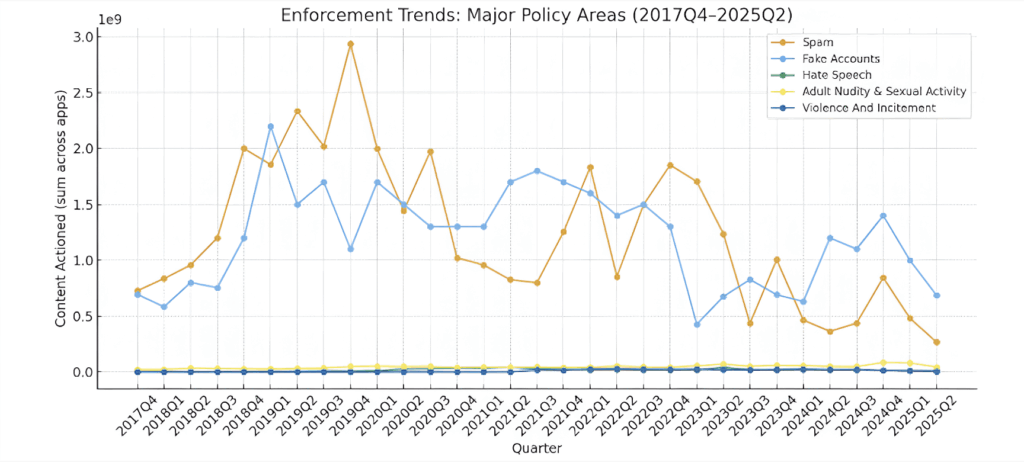

Across the seven years of data, we can see some interesting trends:

- Spam is consistently the largest category, with hundreds of millions of pieces of content actioned each quarter.

- Fake Accounts also dominates, though its pattern is more irregular (reflecting periodic large-scale sweeps).

- Adult Nudity & Sexual Activity, Hate Speech, and Violence & Incitement have steady but much lower volumes in comparison.

- Child Safety–related categories are visible but remain lower in total numbers.

This long view reinforces that Meta’s moderation is fundamentally a spam/fake accounts problem in terms of scale, while socially sensitive categories (nudity, hate, violence) occupy a smaller but more reputationally critical share.

The Scale of Spam and Fake Accounts

Together, spam and fake accounts form the backbone of Meta’s enforcement efforts. From the very first quarters, spam has dwarfed every other policy area. Hundreds of millions of spam posts are actioned each quarter, with occasional peaks reflecting surges in automated activity.

However, fake accounts have overtaken spam as the dominant enforcement category in the last 18 months. Fake accounts are not just a nuisance but a threat: these are not merely anonymous accounts, but accounts often created to amplify spam, scams, or influence operations. The data shows periodic sweeps removing massive numbers at once. Unfortunately, the decline seen up to Q1 2024 reversed, with a flare-up by Q4 2024 (a cumulative 1.4 billion reported across all Meta apps in the quarter), and the figure has only come down in the first half of 2025 (to 687 million in Q2 2025). Meta's platforms, it seems, are still being used to create fake or 'fraudulent' accounts.
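To put the drop between the two quoted quarters in proportion, the relative change can be worked out directly. The following is a minimal sketch using only the rounded figures quoted above; the function name `percent_change` is an illustrative helper, not something taken from Meta's report.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from `old` to `new`, expressed in percent."""
    return (new - old) / old * 100.0


# Rounded figures as quoted in the text (all Meta apps, per quarter).
q4_2024 = 1.4e9   # 1.4 billion in Q4 2024
q2_2025 = 687e6   # 687 million in Q2 2025

change = percent_change(q4_2024, q2_2025)
print(f"Change from Q4 2024 to Q2 2025: {change:.1f}%")  # about -50.9%
```

On these rounded inputs the Q2 2025 figure is roughly half of the Q4 2024 peak; the exact percentage would shift slightly with the unrounded values in the report.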

The Socially Sensitive Categories

Other policy areas tell a subtler story. Adult Nudity & Sexual Activity, Hate Speech, and Violence & Incitement consistently appear in the tens of millions each quarter. Their numbers are small compared to spam, but their visibility and sensitivity make them central to public debates about free expression, cultural norms, and political speech. Unlike spam, enforcement here involves a more delicate balance between protecting users and avoiding overreach.

- Hate Speech shows steady actioned volumes and high proactive rates, but it remains one of the most scrutinised areas given its social and political implications.

- Nudity has high volumes, particularly on Instagram, where image-sharing naturally increases exposure to borderline cases.

- Violence & Incitement is more stable, with low reported prevalence (a small percentage of content seen by users), but enforcement still numbers in the millions.

Child Safety and Other Categories

Smaller in absolute numbers, but no less critical, are the child safety categories (sexual exploitation, nudity, endangerment). These do not register the same large volumes, but they remain a focus area for proactive detection technologies and partnerships with law enforcement. The number of Content Appealed cases in Q2 2025 for Child Endangerment: Nudity and Physical Abuse increased 24% from the previous quarter, and 142% from a year earlier. Improved detection capability is likely a factor, but the absolute numbers are also higher (202,000 pieces of content in Q2 2025).
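The percentage increases above imply approximate earlier values, which can be back-calculated as a rough sanity check. This sketch assumes that the 202,000 figure is the Q2 2025 value to which both percentages apply, which the text suggests but does not state outright; `implied_baseline` is a hypothetical helper name, and the results are estimates, not numbers from the report.

```python
def implied_baseline(current: float, pct_increase: float) -> float:
    """Earlier value implied by `current` after a `pct_increase` percent rise."""
    return current / (1 + pct_increase / 100.0)


# Assumption: 202,000 is the Q2 2025 figure that both increases refer to.
q2_2025 = 202_000

prev_quarter = implied_baseline(q2_2025, 24)   # implied Q1 2025 value
year_ago = implied_baseline(q2_2025, 142)      # implied Q2 2024 value

print(f"Implied previous quarter: {prev_quarter:,.0f}")
print(f"Implied year-ago quarter: {year_ago:,.0f}")
```

Under that assumption the implied values are roughly 163,000 for the previous quarter and roughly 83,500 for the same quarter a year earlier.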